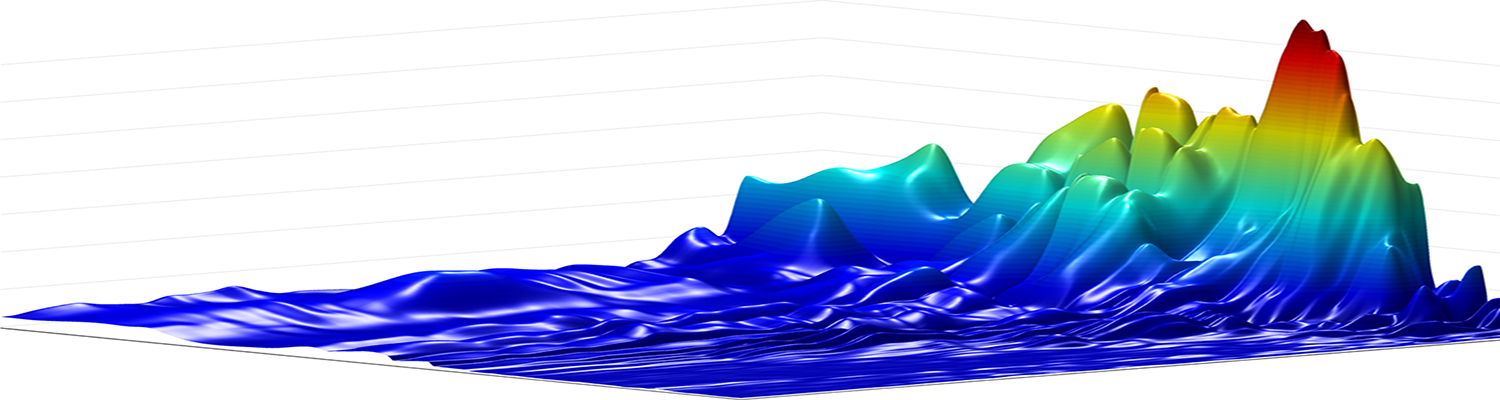

Fig. 2 from DiCarlo, Zoccolan, and Rust (2012). Visual areas closer to the retinal input (fewer synapses away) do not separate objects well by the category in question, whereas responses in areas a few synapses further along can separate object categories.

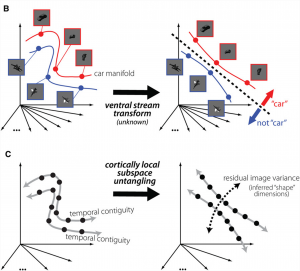

Perception is geared towards constructing ‘objects’ or ‘identities’ from the elements of sensory input. In vision, this means that the brain must convert the energy hitting the retina, characterized by its wavelength, its location on the retina, and its rate of change, into meaningful information about who and what is around us. We perceive not only through signals arriving via those retinal channels, but also through complementary information from other sensory modalities and from our past, whether through gradual learning about objects in places or from the recent history of what we have been looking at.

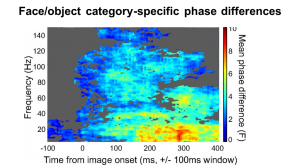

We take the stance that what is important for an organism is typically reflected in neural specializations. For social primate species, that includes understanding other individuals, their roles and likely actions, and the wealth of information conveyed by their faces and body language. The neural coding of faces and non-face objects takes place in elaborated areas of cortical tissue, in addition to subcortical regions that are generally well conserved across species. These coding mechanisms allow rapid categorization of some types of objects, as well as protracted responses that may help integrate visual inputs over time and across the abrupt changes in retinal input that occur with saccadic eye movements.

Relevant lab publications:

- Turesson HK, Logothetis NK, Hoffman KL (2012) Category-selective phase coding in the superior temporal sulcus. Proceedings of the National Academy of Sciences. doi:10.1073/pnas.1217012109

- Leonard TK, Blumenthal G, Gothard KM, Hoffman KL (2012) How macaques view familiarity and gaze in conspecific faces. Behavioral Neuroscience. doi:10.1037/a0030348

- Bartlett AM, Ovaysikia S, Logothetis NK and Hoffman KL (2011) Saccades during object viewing modulate oscillatory phase in the superior temporal sulcus. Journal of Neuroscience 31(50):18423-18432. doi:10.1523/JNEUROSCI.4102-11.2011

- Chau VL, Murphy EL, Rosenbaum RS, Ryan JD and Hoffman KL (2011) A flicker change detection task reveals object-in-scene memory across species. Frontiers in Behavioral Neuroscience 5:58. doi:10.3389/fnbeh.2011.00058

- Hoffman KL and Logothetis NK (2009) Cortical mechanisms of sensory learning and object recognition. Philosophical Transactions of the Royal Society B (364) 321-329.

- Huddleston WE and Hoffman KL (2008) Social cognition: LIP activity follows the leader. Current Biology 18(8): R344.

- Hoffman KL, Ghazanfar AA, Gauthier I, Logothetis NK (2008) Category-specific responses to faces and objects in primate auditory cortex. Frontiers in Systems Neuroscience. doi:10.3389/neuro.06/002.2007

- Dahl CD, Logothetis NK, Hoffman KL (2007) Individuation and holistic processing of faces in rhesus monkeys. Proceedings of the Royal Society of London B: Biological Sciences 274: 2069-2076. doi:10.1098/rspb.2007.0477

- Hoffman KL, Gothard KM, Schmid MC, Logothetis NK (2007) Facial-expression and gaze-selective responses in the monkey amygdala. Current Biology 17: 1–7. Commentary by AJ Calder and L Nummenmaa.

- Ghazanfar AA, Maier JX, Hoffman KL, Logothetis NK (2005) Multisensory integration of dynamic faces and voices in rhesus monkey auditory cortex. Journal of Neuroscience 25: 5004-5012.